The development of low-code technology has been revolutionary for nearly every tech-based enterprise around the world. With low-code technology, firms of all kinds can save a considerable amount of money on their ETL (extract, transform, load) processes and can significantly improve their overall operating processes.

As a result, low-code technology—whether it is provided by the Visual Flow platform or anything else—has become an increasingly important component of the broader data management process. When scaled to the enterprise level, switching from a “traditional” code platform to a low-code platform can help enterprises across several industries save millions of dollars—or even tens of millions of dollars—on their data management processes every year.

Of course, if your firm is deciding whether switching to a low-code platform makes sense for you, there are a lot of things you’ll need to consider. This includes the end goal(s) of the platform you are coding (internal, sales, marketing, etc.), your current budget, and the size of the enterprise.

Nevertheless, as we will further discuss throughout this comprehensive guide, there are several reasons why small, medium, and large digital enterprises continue choosing to use a low-code alternative. Whether it is through the use of Visual Flow or any other alternative, selecting the low-code option has proven to be incredibly beneficial.

Benefits of Low-Code for Apache Spark

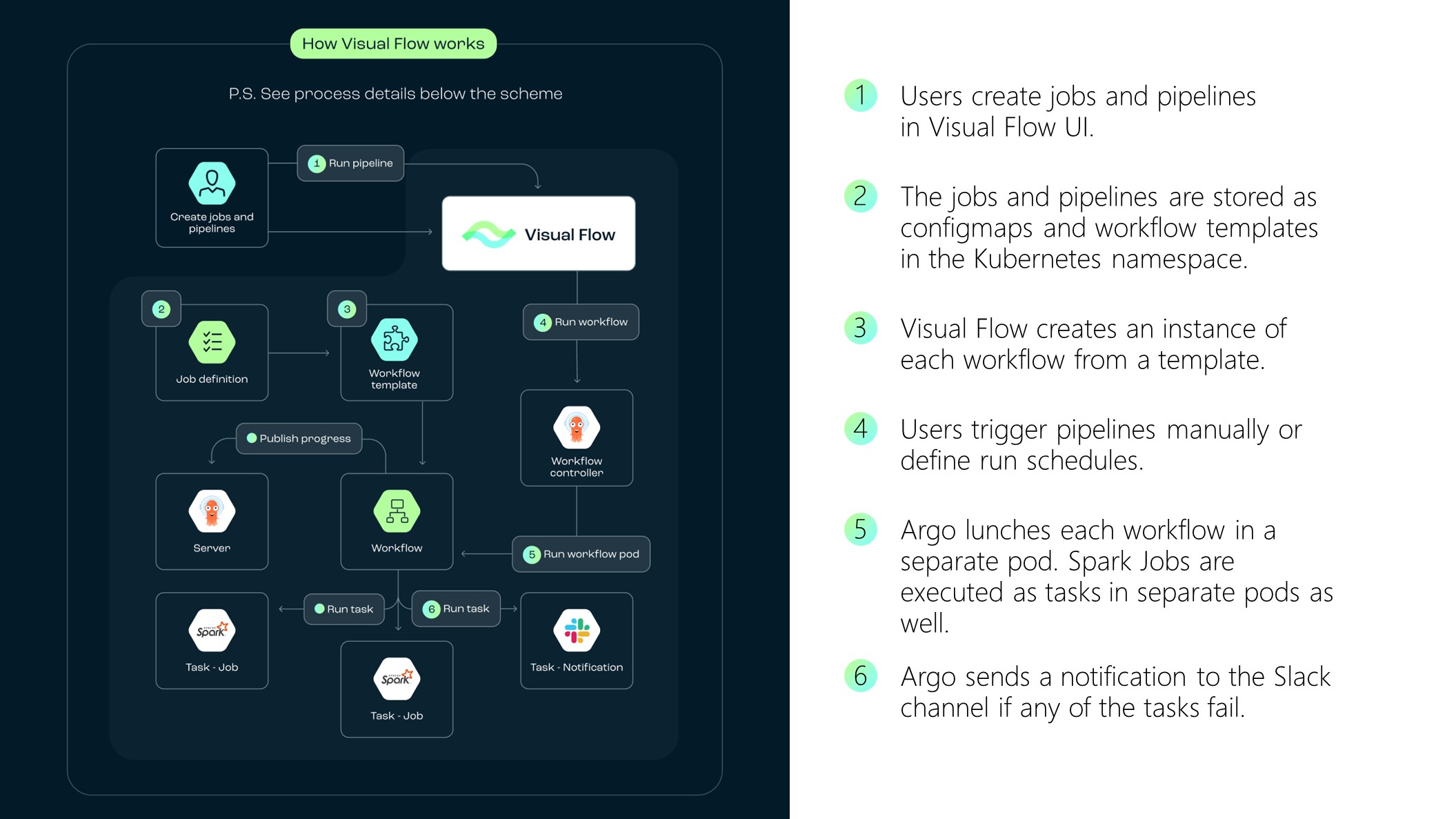

Visual Flow is a natural starting point. This cloud-based ETL tool from IBA Group incorporates Apache Spark, Kubernetes, and Argo Workflows, so by mastering Visual Flow you benefit from all three. It’s a strong choice for teams that don’t want to spend time and money on complicated, expensive tools with redundant features, or to build a solution on their own. Because the tool doesn’t require coding skills, you also avoid the cost of hiring or training additional developers.

It’s a simple ETL solution covering all your data preparation and communication needs. The cloud-native tool requires no additional development, and there is no need to replace it when the project scales.

The Apache Spark platform was designed to address several problems that had long plagued large-scale data processing. General inefficiencies, high costs, counter-intuitive user interfaces, and other widespread issues all called for change. Since its initial release, Apache Spark has been widely regarded as a leading data processing engine. A stable release of Apache Spark in April 2023 helped grow the popularity of this data integration solution even further.

The benefits of Apache Spark are numerous and well-documented. But since the original launch of this platform, users have become increasingly curious about how they can maximize their efficiency while using Apache Spark. As countless case studies have helped reveal, one of the best ways to maximize efficiency is through the use of low-code technologies, such as Visual Flow.

Generally speaking, the term low-code development platform (LCDP) can be used to describe any system that enables users to change the code associated with a specific page or platform using a graphical or otherwise simplified interface. This means that instead of manually entering every code change, which used to be the universal norm, users can simply drag and drop components as needed.
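
To make the idea concrete, here is a minimal sketch in plain Python of how a visually assembled pipeline could be stored as data and then executed. Everything in it (the `PIPELINE` spec, the step names, and the `run_pipeline` helper) is hypothetical and for illustration only. It is not how Visual Flow or any other platform is implemented, and a real tool would typically generate Spark jobs rather than process rows in memory.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]

# Hypothetical step library: each "block" a user could drag onto a canvas
# maps to an ordinary function that takes rows and returns rows.
def filter_rows(rows: List[Row], column: str, equals: Any) -> List[Row]:
    """Keep only the rows whose `column` equals the given value."""
    return [r for r in rows if r.get(column) == equals]

def rename_column(rows: List[Row], old: str, new: str) -> List[Row]:
    """Return copies of the rows with column `old` renamed to `new`."""
    return [{(new if k == old else k): v for k, v in r.items()} for r in rows]

STEP_LIBRARY: Dict[str, Callable[..., List[Row]]] = {
    "filter": filter_rows,
    "rename": rename_column,
}

# What a visual editor might save: an ordered list of configured steps.
PIPELINE = [
    {"step": "filter", "params": {"column": "country", "equals": "DE"}},
    {"step": "rename", "params": {"old": "amt", "new": "amount"}},
]

def run_pipeline(rows: List[Row], pipeline: List[Dict[str, Any]]) -> List[Row]:
    """Apply each configured step in order; fail loudly on unknown steps."""
    for node in pipeline:
        name = node["step"]
        if name not in STEP_LIBRARY:
            raise ValueError(f"Unknown step: {name}")
        rows = STEP_LIBRARY[name](rows, **node["params"])
    return rows

if __name__ == "__main__":
    source = [
        {"country": "DE", "amt": 10},
        {"country": "FR", "amt": 7},
    ]
    print(run_pipeline(source, PIPELINE))
```

Running the script keeps only the row for `DE` and renames `amt` to `amount`. The point of the design is that the user edits the `PIPELINE` data through an interface, while the step functions are written once by the platform.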

As you’d probably expect, switching to a low-code platform provides an astounding number of benefits. These include:

- Streamlined Development Process: without low-code technology, most enterprises will end up finding themselves significantly hindered. The alternative—sometimes referred to as “high code”—usually involves significant delays in structuring the data storage process, as well as various other inefficiencies. In most cases, these inefficiencies are entirely unnecessary; there are very few processes and tasks that cannot be achieved with a modern low-code alternative, meaning that most traditional systems have simply proven themselves to be outdated.

- Faster Time-to-Market: as a result of the streamlined development process, using a low-code ETL can help ambitious firms deliver their products, platforms, and systems much faster to the broader market. This is especially important for enterprises that are in the startup phase and are likely competing with other enterprises trying to launch similar systems. In some cases, using a low-code system can potentially reduce the estimated time-to-market by weeks, or even months. When an enterprise is operating at scale, that can make a huge difference.

- Improved Collaboration Between Developers and Non-Developers: prior to the introduction of low-code technology, essentially any team member who hoped to make changes to a platform or to how data is stored would need to have at least some coding experience. In most cases, especially for more sophisticated firms operating at a higher level, they would need to have formal training as a developer. But with low-code tech, the way that data is extracted, transformed, and loaded can be managed by just about anyone. This makes it significantly easier to collaborate within a team and for firms to achieve their goals.

- Easier Maintenance and Updates: even if your enterprise is confident in the original data storage system, you know that system will—eventually—need to be updated, maintained, and otherwise modified over time. Using a system that takes too long to make necessary changes can end up costing the enterprise thousands or even millions of dollars. But by leveraging a low-code system, firms can operate in a much more dynamic way and make all changes or adaptations whenever needed.

These are just a few of the reasons why a lot of data-dependent enterprises choose to utilize a low-code alternative every year. Not only are these systems much more affordable than their traditional counterparts, but they also provide a tremendous range of operational benefits, whether you are utilizing Apache Spark for ETL or any comparable platform.

How Low-Code Enhances Apache Spark Adoption

Now that we have discussed the broad benefits of using low-code solutions, let’s take a closer look at how these systems, in particular, enhance the adoption of the increasingly popular Apache Spark.

- Simplifying the Learning Curve: these days, the operational value of any given system is largely determined by whether a team is actually able to use it. Depending on the dynamics of the team (see the point above about developers and non-developers), only a small part of the team might be able to use the platform. Because low-code systems are so intuitive and accessible, it is much easier to get the entire team on board and moving in the right direction.

- Encouraging Wider User Adoption: when users are able to directly engage in their own data storage system, they will almost always be much more interested in actually using it. That’s why low-code systems, such as Visual Flow, have become so popular among firms of all kinds—there is simply no better alternative.

Keeping all of these things in mind, you might be wondering: what is the best low-code ETL tool to complement Apache Spark?

Let’s take a closer look.

Low-Code Tools for Apache Spark

Perhaps the best low-code tool for Apache Spark, as suggested, is Visual Flow. Through the use of Visual Flow, users can set up complex, manageable data pipelines without ever having to enter (or even understand) a single line of code. Additionally, when compared to many of its competitors, Visual Flow is often considered the option that offers the best overall combination of performance, accessibility, affordability, and scalability. No matter how much data your company might be working with, Visual Flow likely offers viable low-code solutions.

Of course, there are plenty of other low-code tools for Apache Spark that might also be worth considering. Depending on whom you ask, these might include OutSystems, Prophecy, Zoho, ManageEngine, and various others. When comparing your options, be sure to consider your current needs, future data storage needs, budget, and general team dynamic.

The more you can do to understand your current position, the better off you will be. If the data you plan to extract, transform, and load is unusual in any way (perhaps it’s broken or stored in an unconventional way), you might want to take some time to seek out a more personalized solution. In some cases, using multiple different Apache Spark powered ETL tools might be in your best interest.

Once you have decided on a particular low-code ETL platform, the next step is integration. Some of these solutions will allow you to get started in as few as 15 minutes. Fine-tuning and adjusting the low-code solution will take a little more time, but being able to get started quickly is undeniably an advantage.

As time goes on, you’ll be able to measure the performance of your ETL processes, as well as the corresponding costs. Firms that are able to closely monitor the outcomes of their current strategies will be in a position for long-term success.
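
One simple way to start measuring is to time each stage of a run. The sketch below uses only the Python standard library. The `timed_stage` helper and the three placeholder stage functions are hypothetical stand-ins, not part of Spark or Visual Flow, and a production setup would more likely rely on the monitoring your platform already provides.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator, List

@contextmanager
def timed_stage(name: str, timings: Dict[str, float]) -> Iterator[None]:
    """Record the wall-clock duration of the enclosed block under `name`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[name] = time.perf_counter() - start

# Placeholder stages standing in for real extract/transform/load work.
def extract() -> List[int]:
    return list(range(1000))

def transform(rows: List[int]) -> List[int]:
    return [r * 2 for r in rows if r % 2 == 0]

def load(rows: List[int]) -> int:
    return len(rows)  # pretend this many rows were written

def run_etl() -> Dict[str, float]:
    """Run the three stages and return the seconds spent in each."""
    timings: Dict[str, float] = {}
    with timed_stage("extract", timings):
        rows = extract()
    with timed_stage("transform", timings):
        rows = transform(rows)
    with timed_stage("load", timings):
        load(rows)
    return timings

if __name__ == "__main__":
    for stage, seconds in run_etl().items():
        print(f"{stage}: {seconds:.6f}s")
```

Logging these per-stage durations for every run gives you a baseline to compare against after each pipeline change, and the same numbers can feed a cost estimate if you know what your compute costs per unit of time.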

Conclusion

In this modern, highly competitive era, being able to effectively manage data is incredibly important. That’s why hundreds of millions of dollars—every year—are being dedicated to ETL and related processes. In the end, one of the most important things your enterprise will need to consider is productivity. Ask yourself, what am I able to get from my current Apache Spark ETL tools? If you are unsatisfied with the answer to this question, then it might be time to make a change.

FAQ

Low-code technology simplifies the learning curve and encourages wider user adoption of Apache Spark by reducing the need for advanced programming skills. With an intuitive visual interface and drag-and-drop functionality, users can quickly create and configure data pipelines. This opens up Spark’s powerful data processing capabilities to a wider audience, including data analysts and other non-programming professionals. Furthermore, it accelerates the development process, leading to faster deployment of data solutions.

Visual Flow is an ETL tool that eliminates the need for handwritten code when working with Spark, resulting in significant time and cost savings. With a strategic implementation of ETL infrastructure, data management costs can be significantly reduced.

When selecting a low-code ETL tool for Apache Spark, consider its ease of use, flexibility, integration capabilities with existing systems, scalability, and cost. Also, check if it supports the specific data formats you work with and if it has reliable customer support.
