Tools for business analysts, apps for data engineers, platforms for streaming data in real-time, places to store historical information — you’re not the only one feeling bewildered by this chaotic tech stack. That’s why you’d likely be glad to find someone who can create a one-size-fits-all solution from this complex combination of technologies. The idea is to turn data into valuable insights, products, and services.

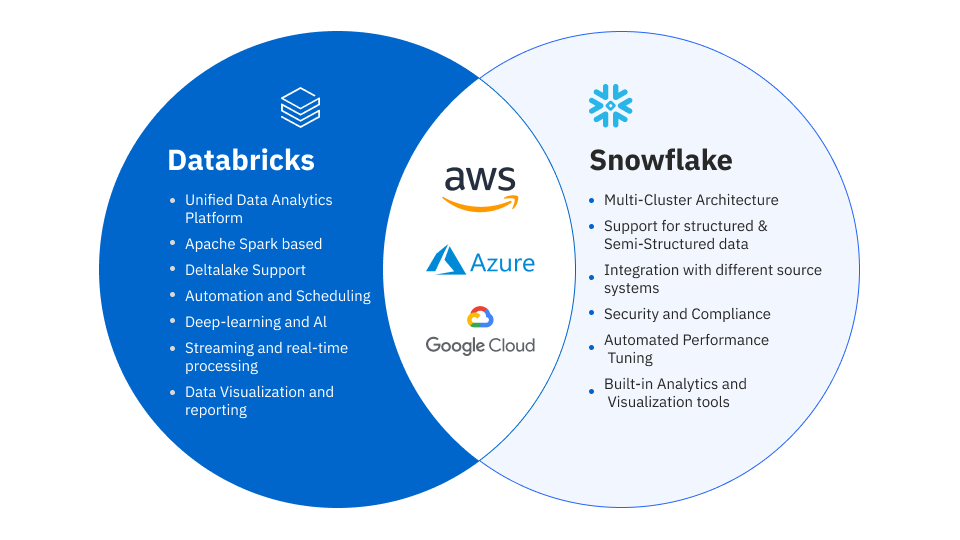

Databricks and Snowflake are currently the big names working to offer a unified, cloud-native data platform for all sorts of needs. Both companies started out operating in different areas of data management, but as they’ve grown, they’ve increasingly stepped on each other’s toes, triggering the Databricks vs Snowflake battle. While the platforms are moving to the modern Lakehouse concept (albeit at a different pace), they retain the strengths and weaknesses from their beginnings. We sliced and diced all the main aspects of these solutions to give you a clearer picture of their preferred uses. So, what is the difference between Databricks and Snowflake?

Databricks vs Snowflake: Data Warehousing and Architecture

- Data architecture

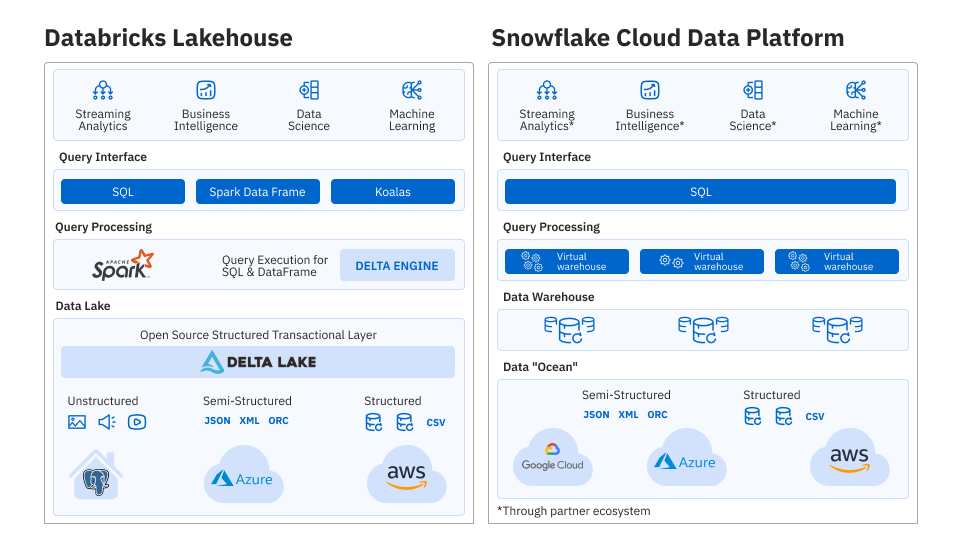

The primary difference between Databricks and Snowflake is the architecture at the root of their data warehousing solutions. Databricks uses a Lakehouse architecture. This setup incorporates Delta Lake, an open-source storage layer for data lakes on major cloud services like AWS, Azure, and Google Cloud. Thanks to this design, Databricks can handle large volumes of raw data and supports both batch and stream processing, which makes it well suited to real-time analytics, machine learning, data engineering, and data science.

Snowflake, by contrast, is a next-generation SQL data warehouse built for managing structured information. It takes the traditional warehouse and amps it up by running workloads on clusters of compute nodes called virtual warehouses. Snowflake also bridges the gap between warehouse and lake architectures by supporting Apache Iceberg, an open-source table format, and by offering data lake management directly from Snowflake. Even so, the platform’s core strengths remain database management, batch processing, and industry-specific BI solutions.

- Data structures

Both Databricks and Snowflake support semi-structured and structured data, but when it comes to unstructured data like text, images, audio, and video, Snowflake doesn’t quite cover all the bases. Databricks, on the other hand, can handle a wider range of data types, which is a big advantage given how businesses today collect massive amounts of information in all sorts of formats. This capability can significantly improve the way businesses use their data.

- Table-level partition and pruning techniques

Now, let’s look at how Databricks and Snowflake optimize query performance. Both organize data into tables for easier management and querying, but each uses distinct techniques to speed up reads. Snowflake relies on micro-partitions, its own way of storing and sorting data. Micro-partitions automatically store table rows in a columnar format and capture metadata, such as the range of values in each column, that describes how data is distributed across them. When you run a query, Snowflake skips any micro-partitions that can’t contain the data you’re looking for and reads only the relevant ones, down to the columns the query needs.
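To make the pruning idea concrete, here is a minimal Python sketch. It is a simplified model, not Snowflake’s implementation: the hypothetical `MicroPartition` class records only per-column min/max values when it is created, and a range filter skips every partition whose range cannot overlap the requested one.

```python
from dataclasses import dataclass, field


@dataclass
class MicroPartition:
    """Hypothetical stand-in for a micro-partition: a few rows plus min/max metadata."""
    rows: list
    stats: dict = field(init=False)

    def __post_init__(self):
        # Capture per-column min/max once, when the partition is written.
        columns = self.rows[0].keys()
        self.stats = {
            col: (min(r[col] for r in self.rows), max(r[col] for r in self.rows))
            for col in columns
        }


def prune(partitions, column, low, high):
    """Keep only partitions whose [min, max] range for `column` overlaps [low, high]."""
    return [
        p for p in partitions
        if p.stats[column][1] >= low and p.stats[column][0] <= high
    ]


def scan(partitions, column, low, high):
    """Apply the row-level filter, but only inside partitions that survive pruning."""
    return [
        row
        for p in prune(partitions, column, low, high)
        for row in p.rows
        if low <= row[column] <= high
    ]


partitions = [
    MicroPartition([{"order_date": 20240103, "amount": 10}, {"order_date": 20240115, "amount": 25}]),
    MicroPartition([{"order_date": 20240201, "amount": 40}, {"order_date": 20240220, "amount": 5}]),
    MicroPartition([{"order_date": 20240305, "amount": 70}, {"order_date": 20240330, "amount": 15}]),
]

# A February filter: the metadata alone rules out the January and March partitions.
survivors = prune(partitions, "order_date", 20240201, 20240229)
february = scan(partitions, "order_date", 20240201, 20240229)
print(len(survivors))                   # 1
print([r["amount"] for r in february])  # [40, 5]
```

Dates are stored as integers only to keep the comparison simple; the point is that the pruning decision uses metadata without opening the skipped partitions.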

Now, who are Snowflake’s competitors in this aspect? Of course, it’s Databricks. The platform also supports table-level partitioning, splitting datasets by chosen keys so that queries can prune irrelevant partitions. But for tables smaller than 1 TB, Databricks recommends skipping partitioning and using Z-ordering instead. This technique speeds up reads by colocating related data, such as values of “category” and “price,” in the same set of files. You can also combine the two: partition by low-cardinality fields and Z-order by the columns that queries filter on across multiple dimensions.
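Z-ordering is based on a Z-order (Morton) curve: the bits of several column values are interleaved into a single sort key, so rows that are close in every dimension tend to land in the same files. The sketch below only illustrates that idea and is not Databricks’ implementation (on Databricks you would run `OPTIMIZE table_name ZORDER BY (col1, col2)`). It assumes small non-negative integer columns, a hypothetical `category_id` and `price_bucket`, and treats every four sorted rows as one “file” with min/max statistics.

```python
def morton_key(x: int, y: int, bits: int = 16) -> int:
    """Interleave bits: bit i of x goes to position 2*i, bit i of y to position 2*i + 1."""
    key = 0
    for i in range(bits):
        key |= ((x >> i) & 1) << (2 * i)
        key |= ((y >> i) & 1) << (2 * i + 1)
    return key


def split_into_files(rows, file_size, sort_key):
    """Sort rows with `sort_key`, then cut them into fixed-size 'files'."""
    ordered = sorted(rows, key=sort_key)
    return [ordered[i:i + file_size] for i in range(0, len(ordered), file_size)]


def files_to_read(files, column, value):
    """Count files whose min/max range on `column` could contain `value` (the rest are skipped)."""
    return sum(
        1 for f in files
        if min(r[column] for r in f) <= value <= max(r[column] for r in f)
    )


# 64 rows: every combination of 8 category IDs and 8 price buckets.
rows = [{"category_id": c, "price_bucket": p} for c in range(8) for p in range(8)]

linear_files = split_into_files(rows, 4, lambda r: (r["category_id"], r["price_bucket"]))
z_files = split_into_files(rows, 4, lambda r: morton_key(r["category_id"], r["price_bucket"]))

for column, value in [("category_id", 3), ("price_bucket", 5)]:
    print(column, files_to_read(linear_files, column, value), files_to_read(z_files, column, value))
# category_id 2 4
# price_bucket 8 4
```

Out of 16 files, a plain sort by category reads 2 for a category filter but 8 for a price filter, while the Z-ordered layout reads 4 for either. That balance across several filter columns is what Z-ordering is designed to provide.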

- Platform focus

Another difference between Databricks and Snowflake lies in their approach to ecosystem building. Snowflake leans on third-party services, such as external dashboards and BI tools, to expand its capabilities. To balance this reliance, Snowflake lets users run any type of data application directly on its platform using Snowpark Container Services and its app marketplace. Databricks, on the other hand, prefers to offer ready-to-use solutions so users don’t have to search for additional tools. For example, Databricks simplifies data lake management by replacing third-party data catalogs with its own Unity Catalog.

- Scalability

Databricks and Snowflake both deliver high performance and scalability by separating storage and compute resources. Why is Snowflake better than its competitors in this area? It combines shared-disk and shared-nothing architectures, which improves performance and simplifies data management. This approach lets the data processing and storage layers grow independently. Snowflake also provides virtual warehouses that can be resized as needed and that automatically suspend or resume based on workload. Meanwhile, its multi-cluster warehouses handle both fixed and fluctuating resource needs.
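The scale-out and auto-suspend behavior can be pictured with a toy simulation. The policy below is an assumption made for illustration (one cluster per eight concurrent queries, suspend after 60 idle seconds); in Snowflake, settings such as MIN_CLUSTER_COUNT, MAX_CLUSTER_COUNT, and AUTO_SUSPEND are configured on the warehouse, and the actual scaling decisions are made by the service.

```python
from dataclasses import dataclass


@dataclass
class WarehouseSim:
    """Toy multi-cluster warehouse; the scaling rule is assumed, not Snowflake's algorithm."""
    min_clusters: int = 1
    max_clusters: int = 3
    queries_per_cluster: int = 8  # assumed concurrency one cluster can absorb
    auto_suspend_s: int = 60      # idle seconds before the warehouse suspends
    clusters: int = 0             # 0 means suspended (no compute billed)
    idle_s: int = 0

    def tick(self, running_queries: int, seconds: int = 1) -> int:
        """Advance the clock and return the number of running clusters."""
        if running_queries == 0:
            self.idle_s += seconds
            if self.clusters and self.idle_s >= self.auto_suspend_s:
                self.clusters = 0  # auto-suspend after the idle threshold
            return self.clusters
        self.idle_s = 0
        needed = -(-running_queries // self.queries_per_cluster)  # ceiling division
        # Auto-resume if suspended, then scale out or in within the configured bounds.
        self.clusters = max(self.min_clusters, min(self.max_clusters, needed))
        return self.clusters


wh = WarehouseSim()
timeline = [wh.tick(5), wh.tick(20), wh.tick(40), wh.tick(0, seconds=30), wh.tick(0, seconds=30)]
print(timeline)  # [1, 3, 3, 3, 0]
```

Capping the count at `max_clusters` is what keeps a burst of 40 queries from adding unbounded cost; in this model, the extra queries would simply wait.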

Databricks also keeps everything organized by separating where data is stored from how it’s processed. It uses cloud object storage, such as Amazon S3, for centralized data storage and Apache Spark clusters for data processing. This setup is efficient but also somewhat complex, since it depends on customer configuration. Databricks offers a choice of compute resources for different use cases, along with flexible scaling options like autoscaling. Users gain flexibility, but creating and managing clusters is more demanding in Databricks than in Snowflake.

- Migration to the platform

When it comes to ease of migration, Snowflake wins the Databricks vs Snowflake competition. Because it’s built on a data warehouse architecture, Snowflake is closer to traditional data warehousing solutions that rely on relational tables. Databricks takes a different approach with its data lakehouse model, which requires more effort to configure and to understand how to tailor clusters and features to specific tasks. As a result, getting started with Databricks feels more involved than getting started with Snowflake.

Databricks vs Snowflake: Data Engineering and Integration

- Data engineering

Databricks stands out in the Databricks vs Snowflake comparison when it comes to data engineering. It offers data engineers a great deal of customization through Apache Spark. This is complemented by collaborative Databricks notebooks, powerful ETL pipelining capabilities provided by Delta Live Tables, and other robust tools and companions like Visual Flow. Built in the cloud, for the cloud, Visual Flow leverages Databricks’ features seamlessly. Unlike many legacy or cloud-washed tools, Visual Flow doesn’t require deep coding skills: its intuitive graphical interface allows SQL-savvy analysts and architects to generate Databricks-native code. This low-code/no-code platform boosts productivity and agility by reducing coding errors and shortening time to value. So, while Snowflake does provide tools for building data pipelines, its true strength lies in being a well-managed warehouse rather than a data engineering platform. That’s why Databricks, coupled with Visual Flow, outpaces Snowflake in this aspect of the Databricks vs Snowflake race.

- Data integration

Snowflake customers often rely on external data integration tools, while Databricks users process data directly in cloud storage. Still, this part of the comparison is fairly even: both platforms offer a variety of ways to bring in data from different sources. Snowflake features the COPY INTO command for data loading and supports running SQL commands on a schedule with its serverless compute model. In addition, it provides Snowpipe, a fully managed ingestion service that automates data loading, along with connectors to external systems, such as the Kafka Connector for real-time data imports. Snowflake also works well with popular data integration tools like Fivetran, Stitch, and Airbyte.

Databricks, for its part, also lets users choose between Auto Loader and COPY INTO, and it uses Apache Spark Structured Streaming for efficient streaming and incremental batch ingestion. To simplify integration, Databricks provides native connectors for enterprise applications and databases and supports low-code data loading through third-party tools like Visual Flow via Partner Connect. It also offers managed volumes in Unity Catalog, which play a role similar to Snowflake stages. Interestingly, Snowflake has taken a page from Databricks by removing the need for ingestion when working with Apache Iceberg tables. That’s why, in data integration, the answer to the question “Who are Databricks’ competitors?” is, above all, Snowflake.
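Both COPY INTO and Auto Loader are built around incremental, idempotent loading: files that were already ingested are remembered and skipped on the next run. The minimal Python sketch below shows only that bookkeeping, under simplifying assumptions: a local folder of CSV files stands in for cloud storage, files are identified by name alone, and an in-memory set stands in for the load history each platform maintains for you. The function name `load_new_files` is hypothetical.

```python
import csv
import tempfile
from pathlib import Path


def load_new_files(landing_dir: Path, loaded: set, table: list) -> int:
    """Append rows from CSV files not loaded before; return how many files were ingested."""
    ingested = 0
    for path in sorted(landing_dir.glob("*.csv")):
        if path.name in loaded:
            continue  # already ingested: skipping keeps reruns idempotent
        with path.open(newline="") as f:
            table.extend(csv.DictReader(f))
        loaded.add(path.name)
        ingested += 1
    return ingested


with tempfile.TemporaryDirectory() as tmp:
    landing = Path(tmp)
    load_history: set = set()  # stands in for the platform's load metadata / checkpoint
    orders: list = []

    (landing / "orders_001.csv").write_text("id,amount\n1,10\n2,20\n")
    first_run = load_new_files(landing, load_history, orders)

    rerun = load_new_files(landing, load_history, orders)  # nothing new arrived

    (landing / "orders_002.csv").write_text("id,amount\n3,30\n")
    third_run = load_new_files(landing, load_history, orders)

print(first_run, rerun, third_run, len(orders))  # 1 0 1 3
```

Because already-loaded files are skipped, rerunning a load after a failure or on a schedule does not duplicate rows.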

- Data transformation

Databricks and Snowflake tools aren’t all that different when it comes to data transformation. Both platforms support third-party solutions like dbt, Airflow, Dagster, and Prefect, and both can handle SQL workloads in warehouses. Databricks even offers a traditional SQL warehouse in its classic compute plane. Snowflake users often mix and match features like Tasks, Stored Procedures, Dynamic Tables, and Materialized Views to transform their data, while Databricks customers set up Jobs and Tasks within Workflows and use Delta Live Tables. So, when comparing Snowflake vs Databricks on this point, let your specific needs guide the choice.

- Data processing

You probably want to know who Snowflake’s competitors are in data processing. Many users prefer Databricks to Snowflake for this purpose because the Lakehouse platform relies on powerful Spark Streaming and Structured Streaming. Snowflake, on the flip side, doesn’t quite match up in the streaming department and is generally better suited to batch processing.
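The difference between the two processing styles can be sketched in plain Python. This is a conceptual illustration, not the Spark API: in Structured Streaming the engine manages state, checkpoints, and triggers for you, whereas here a hypothetical `StreamingPageViews` class keeps a running count and updates it one micro-batch at a time instead of recomputing over all data.

```python
from collections import Counter


def batch_page_views(all_events):
    """Batch style: recompute the aggregate over the full history on every run."""
    return Counter(e["page"] for e in all_events)


class StreamingPageViews:
    """Micro-batch style: keep running state and fold in only newly arrived events."""

    def __init__(self):
        self.state = Counter()

    def process_micro_batch(self, new_events):
        self.state.update(e["page"] for e in new_events)
        return dict(self.state)


micro_batches = [
    [{"page": "home"}, {"page": "cart"}],
    [{"page": "home"}],
    [{"page": "checkout"}, {"page": "home"}],
]

stream = StreamingPageViews()
for batch in micro_batches:
    latest = stream.process_micro_batch(batch)  # fresh results after every batch

all_events = [e for batch in micro_batches for e in batch]
print(latest == dict(batch_page_views(all_events)))  # True
print(latest)  # {'home': 3, 'cart': 1, 'checkout': 1}
```

Both approaches arrive at the same totals; the streaming version does less work per update and has up-to-date results after every micro-batch.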

- Query performance

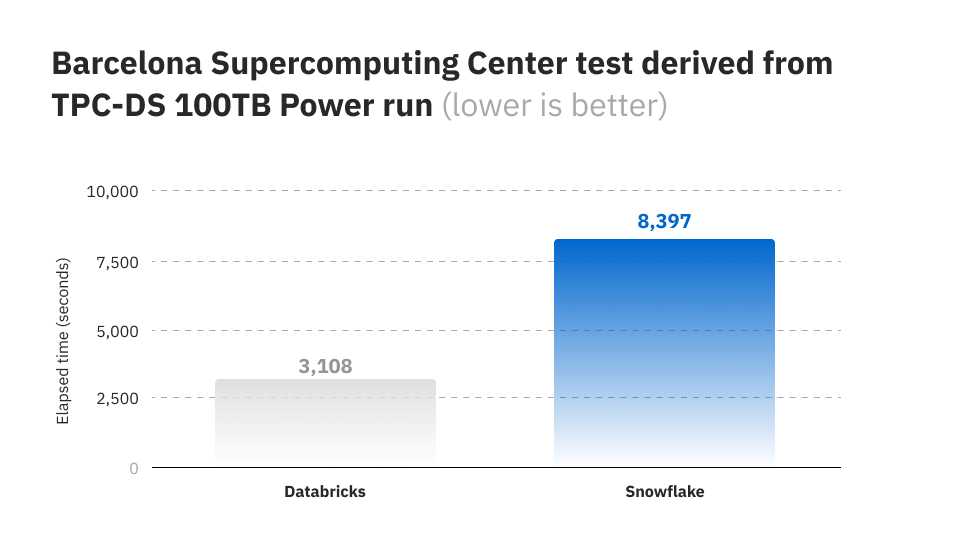

In our Databricks vs Snowflake comparison, we touched on Snowflake’s virtual warehouses: standalone compute clusters, each equipped with a specific amount of CPU, memory, and temporary storage. Each warehouse runs its own set of nodes that execute SQL queries and DML operations in parallel, which leads to impressive query performance. Snowflake’s design boosts performance further through features like columnar storage, caching, clustering, and automatic query optimization. Yet these powerful features don’t necessarily mean Snowflake wins the Databricks vs Snowflake challenge.

People used to think that warehouses always had the upper hand over data lakes and lakehouses when it came to query speed. But Databricks changed the game with its Delta Engine, which combines a query optimizer, a caching layer, and Photon, a C++ execution engine designed for massively parallel processing. Thanks to these innovations, Databricks has improved performance for both large and small queries and even claims to have set a data warehousing performance record, outpacing Snowflake in the process. On top of that, users can tune performance with optimization features like advanced indexing and hash bucketing.

In the Databricks vs Snowflake performance showdown, Snowflake tends to come out on top for interactive queries, largely because it optimizes storage at the time of data ingestion. This is one area where Snowflake is better than its competitors. However, Databricks takes the lead in handling extremely demanding workloads and proves to be a strong contender in BI use cases.

- Data sharing

Both Databricks and Snowflake offer marketplaces that let customers share datasets, AI models, and other data assets securely, without tedious ETL processes or expensive data replication. In terms of maturity, Databricks entered the marketplace scene later than Snowflake, whose marketplace launched back in 2019, so Snowflake’s offering seems more established. However, Snowflake’s sharing capabilities are somewhat confined: because its system is proprietary, sharing works only within its customer base. In contrast, Databricks supports broader, cross-platform sharing thanks to its open-source Delta Sharing protocol.

- Data governance and management

It’s important to understand the difference between Databricks and Snowflake in terms of data governance and management. Databricks offers Unity Catalog as a way to streamline access control, track data lineage, and manage both data and AI assets. It’s also useful for setting up identity management and audit logging. System tables let you track account usage and explore billable usage records, SKU pricing logs, compute metrics, and other operational data. However, these tables show only Databricks costs and don’t provide insights into the underlying cloud expenses, an area of the Databricks vs Snowflake battle where Snowflake excels. Snowflake’s cost management suite stands out with tools like budgets, resource monitors, and rich object metadata, all aimed at keeping tabs on spending. Besides, the platform has bolstered its data governance with Snowflake Horizon, a built-in solution for data security and compliance.
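Snowflake resource monitors pair a credit quota with percentage-based triggers, which are defined in SQL along the lines of `ON 75 PERCENT DO NOTIFY` and `ON 100 PERCENT DO SUSPEND`. The Python function below is a hypothetical illustration of how such triggers are evaluated, not Snowflake code; the quota and thresholds are example values.

```python
def fired_actions(credit_quota: float, credits_used: float, triggers: list) -> list:
    """Return actions whose percentage threshold has been reached, lowest threshold first."""
    used_pct = 100 * credits_used / credit_quota
    return [action for pct, action in sorted(triggers) if used_pct >= pct]


# Example thresholds mirroring the NOTIFY / SUSPEND / SUSPEND_IMMEDIATE trigger actions.
triggers = [(75, "NOTIFY"), (100, "SUSPEND"), (110, "SUSPEND_IMMEDIATE")]

for used in (60, 80, 105, 120):
    print(used, fired_actions(100, used, triggers))
# 60 []
# 80 ['NOTIFY']
# 105 ['NOTIFY', 'SUSPEND']
# 120 ['NOTIFY', 'SUSPEND', 'SUSPEND_IMMEDIATE']
```

In Snowflake, SUSPEND lets running statements finish before the warehouse suspends, while SUSPEND_IMMEDIATE cancels them, which is why the stricter action usually sits at the higher threshold.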

- Deployment and management

Databricks is often celebrated for its flexibility and the wealth of customization it offers. However, setting it up can be difficult, especially for those without a technical background, because of the numerous configurations and options available. This complexity also makes it harder to optimize performance and manage resources. Snowflake, by contrast, is known for its ease of use. As a fully managed SaaS platform, it offers a straightforward setup, a familiar SQL approach, and an intuitive interface.

In the first part of our Databricks vs Snowflake comparison, we explored their key architectural differences and how each platform handles data. Up next, we are going to compare their AI capabilities, collaborative features, costs, and some other properties.

Part 2

Databricks and Snowflake are two leading platforms trying to stitch together the best features of data lakes and data warehouses. In our review, we compare the platforms across a wide range of features and explore which one is more successful in this journey. Be sure to read the first part of this Databricks vs Snowflake comparison, and keep reading to find out our final take on who comes out on top.

Databricks vs Snowflake: Analytics and ML/AI Integration

- Analysis and reporting

Databricks and Snowflake offer a similar lineup of third-party integrations for business intelligence and analytics. They’ve got all the big names covered, including Tableau, Looker, and Power BI.

On the Snowflake side of things, there’s Snowsight, a web interface for crafting dashboards and visualizing query results with charts. Snowflake also provides an LLM-powered Copilot for streamlining data analysis, and it offers Streamlit, an open-source Python library, for those who want to build custom BI apps.

Databricks makes exploring data easy with advanced visualization tools in both Databricks SQL and notebooks. Plus, with its AI/BI product, users can analyze data on their own through easy-to-use, AI-powered dashboards that require little coding knowledge. Then there’s Genie, a conversational interface that offers interactive visuals and personalized suggestions.

- ML/AI

Databricks comes out on top in the ML/AI aspect of the Databricks vs Snowflake competition. It is an all-in-one toolkit for building complete AI/ML pipelines, as it integrates with cloud data storage and allows for full-scale data lake management. It’s also equipped with machine learning libraries like MLlib and TensorFlow, giving you everything you need to train, deploy, and keep an eye on your models. If you prefer Python, you’ll appreciate Databricks’ strong support for it. It also includes MLflow, a powerful open-source MLOps platform, along with AutoML, a low-code automation tool.

Unlike Databricks, Snowflake has taken a different route with its AI and ML capabilities. Instead of building one big ecosystem, it spreads these features across several products. For instance, Snowflake offers a collection of large language models through Cortex, as well as ready-to-use ML Functions that streamline ML workflows in SQL. If you’re into Python, you can tap into the APIs in the Snowpark library to craft custom ML workflows. Users can also deploy their ML models directly in Snowflake via the recently released Snowpark Container Services.

- Collaboration and notebooks

So, who are Databricks’ competitors in this area? There’s no clear-cut answer. Both Databricks and Snowflake offer ways to collaborate through notebooks (Snowflake, for example, added notebooks in 2024). However, Databricks takes the lead with a more robust interactive programming environment that supports Python, SQL, Scala, and R. It also facilitates teamwork and development by connecting Databricks clusters to popular IDEs like Visual Studio Code, PyCharm, and IntelliJ IDEA via Databricks Connect. While Snowflake doesn’t have the same level of built-in collaboration, it does offer plenty of integrations with third-party tools.

Databricks vs Snowflake: Security and Compliance

There is little difference between Databricks and Snowflake when it comes to data security and compliance. The main difference lies in data management: Databricks keeps your data on a network you manage, while Snowflake stores it on its own network. Both platforms support data encryption at rest and in transit, role-based access control, multi-factor authentication, network isolation, and auditing. They also hold compliance credentials such as HIPAA, GDPR, and FedRAMP. As for enhanced security features, Snowflake offers Dynamic Data Masking to protect sensitive data, while Databricks provides a data lake firewall, integration with Azure Active Directory for authentication and authorization, and an automated Security Analysis Tool.
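Dynamic Data Masking works at query time: the stored value stays unchanged, and what a user sees depends on their role. In Snowflake this is written in SQL as a masking policy, typically a CASE expression on CURRENT_ROLE(), attached to a column. The Python below only mimics that behavior; the role names, the `select_customers` helper, and the masking format are hypothetical.

```python
def mask_email(value: str, role: str, allowed_roles=frozenset({"PII_ADMIN"})) -> str:
    """Return the raw value for privileged roles; otherwise hide everything before the '@'."""
    if role in allowed_roles:
        return value
    local, sep, domain = value.partition("@")
    if not sep:
        return "*" * len(value)  # not an email address: mask the whole value
    return "*" * len(local) + "@" + domain


def select_customers(rows, role):
    """Apply the policy at read time; the stored rows are never modified."""
    return [{**row, "email": mask_email(row["email"], role)} for row in rows]


customers = [{"id": 1, "email": "jane@example.com"}]
print(select_customers(customers, "ANALYST"))    # [{'id': 1, 'email': '****@example.com'}]
print(select_customers(customers, "PII_ADMIN"))  # [{'id': 1, 'email': 'jane@example.com'}]
```

Keeping the policy separate from the data means one stored copy can serve users with different clearance levels.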

Databricks vs Snowflake: Costs and User Experience

- Costs

Databricks and Snowflake are cost-effective data solutions because they can scale up and down as workloads change. The primary difference lies in their pricing models. Databricks follows a pay-as-you-go approach, so users pay only for the resources they use. Snowflake, by contrast, requires you to choose from a set of preconfigured warehouse sizes. This setup is convenient but can leave resources unused and therefore waste money.

That said, Databricks has a rather complicated pricing structure: it factors in cloud data storage, Databricks services, and cloud compute costs. Snowflake’s pricing, meanwhile, consists of data storage and compute. Don’t forget to consider the human effort involved, too: since Databricks is more difficult to set up and manage, you may see higher labor costs. Overall, Snowflake generally comes out cheaper when you’re dealing with large compute volumes, whereas Databricks offers more consistent and predictable costs, which makes it adaptable for organizations of different sizes.
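The two pricing models can be compared with some back-of-the-envelope arithmetic. The credits-per-hour ladder and the 60-second billing minimum reflect Snowflake’s documented behavior for standard warehouses; the price per credit, price per DBU, DBU rate, and VM cost are placeholder assumptions, since real rates vary by edition, cloud, and region. Storage costs are left out of this sketch.

```python
# Credits per hour for standard Snowflake warehouses; each size doubles the previous one.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def snowflake_compute_cost(size: str, runtime_s: int, price_per_credit: float) -> float:
    """Per-second billing with a 60-second minimum each time the warehouse resumes."""
    billed_s = max(runtime_s, 60)
    return round(CREDITS_PER_HOUR[size] * billed_s / 3600 * price_per_credit, 2)


def databricks_compute_cost(dbu_per_hour: float, hours: float,
                            price_per_dbu: float, vm_cost_per_hour: float) -> float:
    """DBU charges from Databricks plus the cloud provider's separate VM bill."""
    return round(dbu_per_hour * hours * price_per_dbu + vm_cost_per_hour * hours, 2)


# All prices below are hypothetical placeholders, not published rates.
print(snowflake_compute_cost("M", 1800, price_per_credit=3.00))  # 6.0  (4 credits/h for 30 min)
print(snowflake_compute_cost("XS", 20, price_per_credit=3.00))   # 0.05 (billed as 60 s)
print(databricks_compute_cost(4, 0.5, price_per_dbu=0.15, vm_cost_per_hour=1.20))  # 0.9
```

The Databricks figure has two components because, on classic compute, the cloud provider bills the virtual machines separately from Databricks’ DBU charges.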

- Expertise

Snowflake is the top pick for data analysts and SQL-focused developers, and it also supports Java, Scala, and Python through Snowpark. Databricks, on the other hand, caters to a broader crowd, welcoming analysts, data scientists, and data engineers into the fold. However, it calls for strong technical chops, especially in Python, Scala, and R.

- Service model

Another key difference between Databricks and Snowflake is how they offer their services. Databricks provides a PaaS (platform as a service) model, giving users more flexibility and control over their data. Snowflake, on the other hand, operates as a SaaS (software as a service) platform, which makes it easier to use.

- Vendors

Databricks and Snowflake both work well with major cloud platforms, such as AWS, Azure, and GCP. However, some Databricks tools, like Delta Sharing and MLflow, don’t work seamlessly across all of its supported clouds. Meanwhile, Snowflake promotes its multi-cloud capabilities, but in practice vendor lock-in remains a challenge.

- UI

Snowflake provides an easy-to-navigate, web-based graphical user interface called Snowsight for querying and managing data. Databricks, on the flip side, offers a graphical interface for working with workspace folders and a web-based notebook interface for data exploration, visualization, and analytics. However, be ready for a bit of a learning curve, as it’s tailored to users with a technical background.

- Reviews

The Databricks vs Snowflake review analysis shows that users appreciate both platforms, with 74% of reviewers rating them above four out of five stars. However, Databricks edges slightly ahead in positive reviews.

- Market share

There isn’t much verified information on Databricks’ and Snowflake’s market share in data platforms. However, some sources estimate Snowflake’s share of the data warehousing market at approximately 22% and Databricks’ share of the big data analytics market at nearly 16%.

- Isolated tenancy

Both Databricks and Snowflake give customers plenty of options for obtaining dedicated resources. Snowflake supports multi-tenant pooled resources along with isolated tenancy through its Virtual Private Snowflake (VPS) tier. Databricks, on the other hand, runs the control plane in its own account while placing the data plane and storage in a VPC in the customer’s account. It also provides serverless SQL, which operates in a multi-tenant setup.

Databricks vs Snowflake: Bottom Line

Databricks and Snowflake started in different corners of the data world, but they have become close competitors lately. Snowflake remains primarily a data processing and data warehousing solution, while Databricks is carving out a name for itself as a leader in data and AI. Yet the lines are blurring as Databricks ramps up its query performance and Snowflake dives into machine learning. In practice, many organizations use the two hand in hand: Databricks serves as the data lake management solution for unstructured data and powers ETL pipelines, producing neatly organized data for processing in Snowflake.

Just knowing how Databricks differs from Snowflake is not enough to build complex integrations or choose the best feature suite for your needs. So, why not get a free consultation from experienced data engineers at IBA Group? They have extensive experience crafting all kinds of custom data warehousing solutions. Specify the details of your problem in the form, and we’ll brainstorm a cost-effective data solution to help you out.