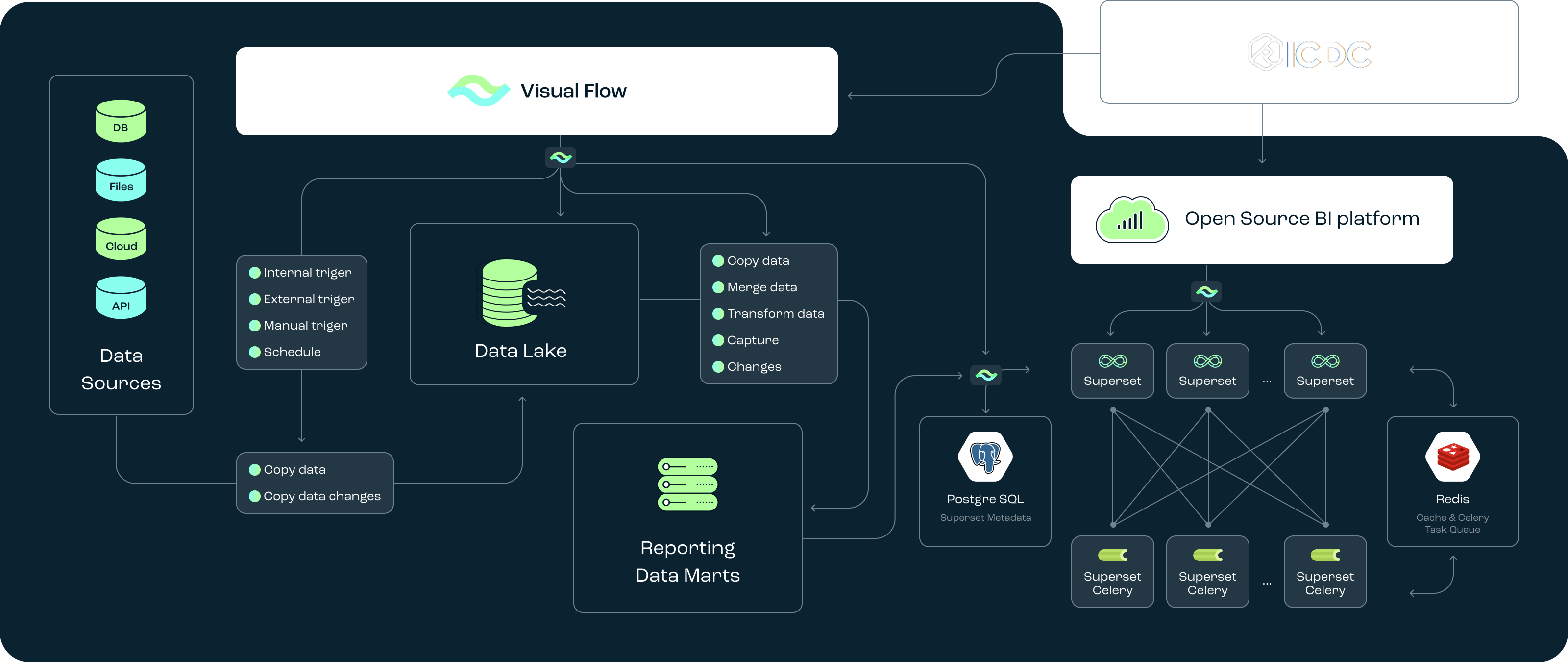

Visual Flow ETL Tool: How It Works

ETL Tool for Asana

Connecting Asana to Databricks

This connection lets you move data from Asana into Databricks, and vice versa, for data analysis and workflow automation.

This setup lets you use Databricks’ analytics capabilities to gain insights from your Asana data. If you need a more detailed, hands-on guide to connecting Asana to Databricks, Visual Flow offers full-scale ETL migration consulting services to help you make the most of the Asana and Databricks integration.

Here’s a short guide on how to establish this connection:

- Make sure your Databricks environment has the tools it needs to call REST APIs. The Python requests library is the usual choice for making HTTP requests.

- Set up authentication. Authenticate your API requests with the Asana personal access token (PAT) you generated earlier. Store this PAT securely in Databricks using a secret scope (Databricks' secret management feature) rather than pasting it into notebooks.

- Fetch data from Asana by calling its REST API (for example, the tasks endpoint for a given project).

- Load data into Databricks.

- Automate data import (you can achieve this by scheduling jobs in Databricks).
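A minimal Python sketch of the fetch step, assuming the requests library and the public Asana REST API base URL https://app.asana.com/api/1.0. It sends the PAT as a Bearer token and follows Asana's offset-based pagination (the next_page.offset value in each response) until every page has been read. The function names, the opt_fields list, and the example project GID are illustrative assumptions, not part of Visual Flow.

```python
"""Sketch: page through Asana tasks with a personal access token (PAT).

Assumptions: function names, opt_fields, and any project GID are
hypothetical placeholders chosen for illustration.
"""
from typing import Callable, Dict, Iterator, List, Optional

ASANA_API = "https://app.asana.com/api/1.0"


def iter_asana_records(
    path: str,
    token: str,
    params: Optional[Dict[str, str]] = None,
    http_get: Optional[Callable] = None,
) -> Iterator[dict]:
    """Yield every record from a paginated Asana list endpoint."""
    if http_get is None:
        import requests  # imported lazily so the function can be tested offline
        http_get = requests.get
    query = dict(params or {})
    query.setdefault("limit", "100")  # Asana returns at most 100 records per page
    headers = {"Authorization": f"Bearer {token}"}
    while True:
        resp = http_get(f"{ASANA_API}{path}", headers=headers, params=query, timeout=30)
        resp.raise_for_status()
        body = resp.json()
        yield from body.get("data", [])
        next_page = body.get("next_page")
        if not next_page:
            break  # no next_page object means this was the last page
        query["offset"] = next_page["offset"]  # opaque token for the next page


def fetch_project_tasks(project_gid: str, token: str, http_get: Optional[Callable] = None) -> List[dict]:
    """Return all tasks of one project with a few commonly useful fields."""
    params = {
        "project": project_gid,
        "opt_fields": "name,completed,due_on,assignee.name,modified_at",
    }
    return list(iter_asana_records("/tasks", token, params, http_get))


# Hypothetical usage inside a Databricks notebook (scope and key names are placeholders):
# token = dbutils.secrets.get(scope="asana", key="pat")
# tasks = fetch_project_tasks("<project-gid>", token)
```

The http_get parameter exists only so the function can be exercised without network access; in a notebook you would leave it unset.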

ETL Data from Asana

Extracting, transforming, and loading data from Asana is not complicated, even for beginners. However, if you’re not sure you can do this yourself and need help integrating Asana and Databricks, you can take advantage of our data engineering services.

Extracting Data

Use tools like Postman, Insomnia, or any other API client to send requests to the Asana REST API and retrieve the data you need. The API returns JSON, so save the responses as JSON files on your local machine, or convert them to CSV if your transformation tool expects it.
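If you would rather script this step than save responses by hand from an API client, a standard-library sketch like the one below writes a list of Asana records both as raw JSON and as a flattened CSV, turning nested objects such as assignee into dotted columns (assignee.name). The function names and output file layout are assumptions for illustration.

```python
import csv
import json
from pathlib import Path
from typing import Dict, List


def flatten(record: dict, prefix: str = "") -> Dict[str, object]:
    """Flatten nested objects, e.g. {"assignee": {"name": "Ann"}} -> {"assignee.name": "Ann"}."""
    flat: Dict[str, object] = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value  # lists are kept as-is and written as their text form
    return flat


def save_records(records: List[dict], out_dir: str, stem: str) -> None:
    """Write records to <stem>.json (raw) and <stem>.csv (flattened) in out_dir."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    # Raw JSON keeps the API response structure untouched
    (out / f"{stem}.json").write_text(json.dumps(records, indent=2), encoding="utf-8")
    rows = [flatten(r) for r in records]
    # Use the union of all keys so records with missing fields still line up;
    # DictWriter fills absent keys with "" and writes None as an empty cell
    columns = sorted({col for row in rows for col in row})
    with open(out / f"{stem}.csv", "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=columns)
        writer.writeheader()
        writer.writerows(rows)
```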

Transforming Data

Use a data transformation tool or Excel to clean and format the data.

- Open the CSV or JSON files in Excel or your preferred data manipulation tool.

- Remove duplicates, handle missing values, and ensure consistency.

- Ensure the data is in a tabular format with appropriate column headers.
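The same cleaning steps can be done in plain Python instead of Excel. This sketch assumes flattened task rows (for example, read back from a CSV) with gid, name, completed, due_on, assignee.name, and modified_at columns. It keeps one row per gid (the most recently modified copy), fills missing names and assignees with placeholder labels, and normalizes completed to a boolean and due_on to a date. The placeholder labels and output column names are hypothetical choices.

```python
from datetime import date
from typing import Dict, List


def clean_tasks(rows: List[Dict[str, object]]) -> List[Dict[str, object]]:
    """Deduplicate, fill missing values, and normalize types for task rows."""
    latest: Dict[str, Dict[str, object]] = {}
    for row in rows:
        gid = str(row.get("gid") or "").strip()
        if not gid:
            continue  # rows without an ID cannot be deduplicated or joined
        prev = latest.get(gid)
        # Keep the most recently modified copy; ISO-8601 timestamps in the
        # same format compare correctly as strings
        if prev is None or str(row.get("modified_at") or "") >= str(prev.get("modified_at") or ""):
            latest[gid] = row

    cleaned = []
    for gid, row in latest.items():
        due = row.get("due_on") or None  # empty strings count as missing
        cleaned.append({
            "gid": gid,
            "name": str(row.get("name") or "").strip() or "(untitled)",  # hypothetical placeholder
            # Accept both JSON booleans and CSV text such as "True"/"False"
            "completed": str(row.get("completed")).strip().lower() in {"true", "1", "yes"},
            "due_on": date.fromisoformat(str(due)) if due else None,
            "assignee_name": row.get("assignee.name") or "Unassigned",  # hypothetical placeholder
        })
    return cleaned
```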

Loading Data

Upload the transformed data files into Databricks and create tables.

- Log in to your Databricks workspace.

- Navigate to the “Data” tab and click on “Add Data”.

- Upload the CSV or JSON files you prepared in the previous step.

- In the “Data” tab, click on “Create Table”.

- Follow the prompts to create a table from your uploaded file.

- Define the schema by specifying column names and data types.

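- To automate the data-loading process, create a scheduled Databricks job that runs a notebook performing the extract, transform, and load steps in code (manual file uploads cannot be scheduled).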

- Set the frequency at which the job should run (e.g., daily, weekly).
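As an alternative to the upload wizard, the table-creation and schema steps can be sketched in a Databricks notebook with PySpark. The helper below derives a DDL schema string (column names plus Spark SQL types) from already-cleaned rows; write_table then passes that string to spark.createDataFrame and saves the result with saveAsTable. It assumes the rows are Python dicts with consistent keys and that the first non-null value determines each column's type. The table name you pass (for example, a hypothetical asana.tasks) is your choice.

```python
from datetime import date
from typing import Dict, List, Optional

# Map Python value types to Spark SQL type names; anything else falls back to STRING
_SPARK_TYPES = {bool: "BOOLEAN", int: "BIGINT", float: "DOUBLE", str: "STRING", date: "DATE"}


def ddl_schema(rows: List[Dict[str, object]]) -> str:
    """Build a DDL schema such as "`gid` STRING, `completed` BOOLEAN" from rows."""
    types: Dict[str, Optional[str]] = {}
    for row in rows:
        for name, value in row.items():
            if value is not None and types.get(name) is None:
                # First non-null value decides the type (exact type, so bool is not int)
                types[name] = _SPARK_TYPES.get(type(value), "STRING")
            else:
                types.setdefault(name, None)  # remember column order; type still unknown
    # Backticks allow column names containing dots or spaces
    return ", ".join(f"`{name}` {t or 'STRING'}" for name, t in types.items())


def write_table(spark, rows: List[Dict[str, object]], table_name: str) -> None:
    """Create or replace a table from cleaned rows; run inside Databricks with its `spark` session."""
    df = spark.createDataFrame(rows, schema=ddl_schema(rows))
    df.write.mode("overwrite").saveAsTable(table_name)
```

In a notebook this might be called as write_table(spark, cleaned_rows, "asana.tasks"), where both the variable and the table name are placeholders.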

This is how you can set up a repeatable ETL process that extracts data from Asana, transforms it into a suitable format, and loads it into Databricks for analysis.
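To automate the scheduled load described above without using the UI, one option is the Databricks Jobs API (version 2.1, POST /api/2.1/jobs/create). The sketch below builds a job that runs a notebook on a Quartz cron schedule (daily or weekly at 06:00, mirroring the example frequencies) and submits it with a Databricks personal access token. The job name, task key, notebook path, cluster ID, and cron presets are hypothetical; adapt them to your workspace.

```python
from typing import Callable, Optional

# Quartz cron fields: seconds minutes hours day-of-month month day-of-week
CRON_PRESETS = {
    "daily": "0 0 6 * * ?",     # every day at 06:00
    "weekly": "0 0 6 ? * MON",  # every Monday at 06:00
}


def job_payload(notebook_path: str, cluster_id: str, frequency: str = "daily", timezone: str = "UTC") -> dict:
    """Build a Jobs API 2.1 create request for a single scheduled notebook task."""
    return {
        "name": "asana-to-databricks-etl",  # hypothetical job name
        "tasks": [{
            "task_key": "load_asana",  # hypothetical task key
            "notebook_task": {"notebook_path": notebook_path},
            "existing_cluster_id": cluster_id,
        }],
        "schedule": {
            "quartz_cron_expression": CRON_PRESETS[frequency],
            "timezone_id": timezone,
            "pause_status": "UNPAUSED",
        },
    }


def create_job(host: str, token: str, payload: dict, http_post: Optional[Callable] = None) -> int:
    """Submit the payload and return the new job's ID."""
    if http_post is None:
        import requests  # imported lazily so the function can be tested offline
        http_post = requests.post
    resp = http_post(
        f"{host.rstrip('/')}/api/2.1/jobs/create",
        headers={"Authorization": f"Bearer {token}"},
        json=payload,
        timeout=30,
    )
    resp.raise_for_status()
    return resp.json()["job_id"]
```

The notebook referenced by notebook_path would hold your own fetch, clean, and load code; the job only triggers it on the chosen schedule.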

Try Visual Flow – Asana Databricks Integration for your data project

Example Structure of a Visual Flow ETL