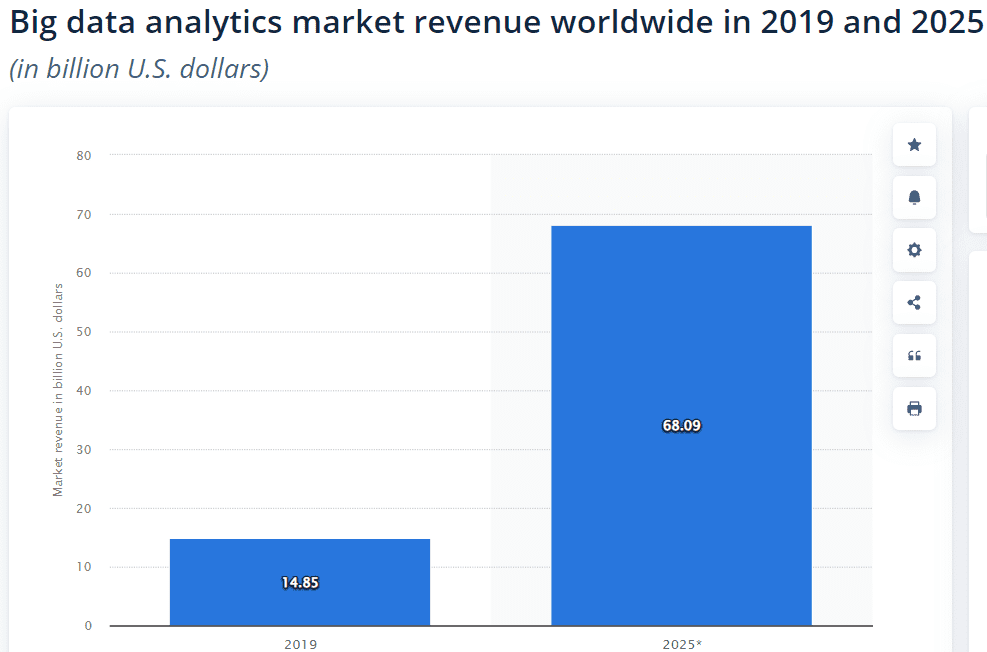

With the growing popularity of big data projects, ETL has become a common approach to data management. The extract, transform, and load (ETL) concept gives engineers a repeatable way to prepare data for analysis. ETL is widely used for Business Intelligence in domains ranging from fintech to food tech and retail. This article compares two ways of creating ETL pipelines, SQL and Apache Spark, to determine which works better for specific projects.

Using Spark for Creating ETL Pipelines

Apache Spark is an open-source distributed computing engine built around the concept of distributed datasets. It provides APIs for creating ETL pipelines and automating data-driven business decisions. Let’s see how to use them.

Data Extraction

During the first stage of data processing, an engineer extracts the required records from “raw” pieces of information. The engineer writes code that reads from the data lake, filters the required data, and finally repartitions the data subset. As a result, the extracted DataFrame (DF) is prepared for the transformation.

Data Transformation

The second stage of the ETL process turns the extracted data into a clean, high-quality dataset. An engineer can achieve this by writing a custom transformation function that takes a DF as an argument and returns a new DF, which replaces the extracted DF.

Data Loading

The last step is called data reporting or data loading. An engineer uses the Spark DataFrame writer to define a function that writes a DF to a given location in Amazon Simple Storage Service (Amazon S3) or another data store.

Why Use Spark?

Spark is considered one of the best ETL tools. It is a quick and convenient way to run the ETL process and was built specifically for processing big data on computing clusters. Here are the main reasons to choose Spark for ETL over other tools.

Processing enormous amounts of data

It’s a strong fit for large-scale data analytics: in a 2014 sorting benchmark, a Spark cluster sorted 100 terabytes of “raw” data in less than half an hour. Furthermore, Spark integrates with many storage systems. For example, it supports HDFS, S3, and MongoDB.

Reducing security risks

The multi-language engine supports different deployment types, and the security level depends on the custom configuration. It is possible to turn on authentication and authorization for the web UI, configure SSL, and enable event logging. At the same time, Spark clusters are typically deployed on a private network rather than exposed to the public Internet.
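As a rough illustration of the configuration mentioned above, the sketch below collects a few security-related Spark properties in a dictionary and applies them to a session builder. The property names are real Spark settings, but every value, user name, and path is a placeholder, and `apply_settings` is a hypothetical helper. A real deployment needs more than this, for example keystore passwords, a shared secret, and an authentication filter for the web UI.

```python
# Property names are Spark configuration keys; all values are placeholders.
SECURITY_SETTINGS = {
    "spark.authenticate": "true",                    # authentication between Spark processes
    "spark.acls.enable": "true",                     # access-control checks for the web UI
    "spark.ui.view.acls": "analyst1,analyst2",       # hypothetical users allowed to view the UI
    "spark.ssl.enabled": "true",                     # SSL for Spark's web endpoints
    "spark.ssl.keyStore": "/path/to/keystore.jks",   # hypothetical path
    "spark.eventLog.enabled": "true",                # record application events
    "spark.eventLog.dir": "/path/to/spark-events",   # hypothetical path
}


def apply_settings(builder, settings):
    """Apply each key/value pair through the builder's config(key, value) method."""
    for key, value in settings.items():
        builder = builder.config(key, value)
    return builder
```

With PySpark installed, the helper could be used as `apply_settings(SparkSession.builder, SECURITY_SETTINGS).getOrCreate()`.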

Effective teamwork in big data projects

Spark is also convenient for large enterprise projects, as it’s an easy-to-configure, fast, and versatile ETL tool. The engine maintains high performance even when a large data science team processes large amounts of data.

Examples of ETL Projects Where It Is Better to Use Spark

Spark is used primarily in cloud computing. It allows engineers to save money when there is a heavy load on cloud object storage (COS). That’s especially important if COS is used not only as a transfer layer but also as a data source (together with a relational database) for streaming or machine learning processing.

Using SQL for Creating ETL Pipelines

Structured Query Language (SQL) is especially helpful in the first stage of creating ETL pipelines, which is extraction. But it can also be used instead of Apache Spark for some kinds of projects. Here are the three stages of creating ETL pipelines with SQL in big data projects.

Data Extraction

Popular database management systems, such as Oracle, MySQL, Microsoft SQL Server, PostgreSQL, and Amazon Aurora, all use SQL. So, it’s easy to take almost any of these data sources and extract data with the help of SQL commands.

Data Transformation

SQL commands also allow engineers to transform data in a particular way. There are many options, such as calculating, joining, or removing data, and the choice depends on the business needs. It’s very convenient when an ETL tool already provides pre-written SQL code for data transformation.

Data Loading

Data loading means making SQL reports or just putting data into databases.
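The three SQL stages above can be illustrated with a small, self-contained sketch. It uses Python’s built-in `sqlite3` module with an in-memory database so that it runs anywhere; the table names, columns, and sample rows are hypothetical, and a production pipeline would target one of the database systems listed earlier.

```python
import sqlite3

# In-memory database standing in for both the source and the target.
conn = sqlite3.connect(":memory:")

# Hypothetical source table with a few raw rows, plus an empty target table.
conn.executescript("""
    CREATE TABLE raw_orders (order_id INTEGER, customer TEXT, amount REAL, status TEXT);
    INSERT INTO raw_orders VALUES
        (1, 'alice', 120.0, 'paid'),
        (2, 'bob',    80.0, 'cancelled'),
        (3, 'alice',  30.0, 'paid'),
        (4, 'carol',  55.5, 'paid');
    CREATE TABLE customer_revenue (
        customer TEXT PRIMARY KEY, total_amount REAL, order_count INTEGER
    );
""")

# Extract (SELECT ... FROM), transform (WHERE, GROUP BY, SUM, COUNT),
# and load (INSERT INTO) in a single statement.
conn.execute("""
    INSERT INTO customer_revenue (customer, total_amount, order_count)
    SELECT customer, SUM(amount), COUNT(*)
    FROM raw_orders
    WHERE status = 'paid'
    GROUP BY customer
""")
conn.commit()

# The loaded table can now back a report.
report = conn.execute(
    "SELECT customer, total_amount, order_count FROM customer_revenue ORDER BY customer"
).fetchall()
print(report)  # [('alice', 150.0, 2), ('carol', 55.5, 1)]
```

Keeping all three stages in one `INSERT ... SELECT` statement means the data never leaves the database engine, which is one reason a SQL-only pipeline can stay simple.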

Why Use SQL?

SQL is a basic part of any ETL process, as most current databases are SQL-based. Let’s see in which cases it’s especially useful.

Effective data warehousing

Complex data processing requires SQL for ETL pipelines and data warehousing. This means taking “raw” data from different sources of information and making insightful reports based on the results. It’s effective for BI projects and making data-driven business decisions.

Quick solutions

SQL is a simple way to create ETL pipelines. SQL scripts are easy and quick to write, even for junior data engineers. They help to fetch data from almost any data source, whether it is a spreadsheet or a set of tables and databases.

Low-cost solutions

Projects with a simple application architecture do not need special ETL tools. In some cases, the engineer can just write SQL commands to get the results. An example of such a project appears later in the article.

Examples of ETL Projects Where It Is Better to Use SQL

Integration of social media ads is one of the most common cases of using SQL for ETL creation. In this situation, the data warehousing tools use SQL to build reports on clicks, views, money spent on advertising, and other metrics. The output is shown on dashboards in the admin panel.
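A report of this kind might look like the sketch below, which again uses Python’s built-in `sqlite3` module so the query can be run as-is. The `ad_events` table, its columns, and the sample figures are hypothetical; click-through rate and cost per click are included as examples of the “other metrics” a dashboard might show.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical ad statistics already extracted from the ad platforms.
conn.executescript("""
    CREATE TABLE ad_events (platform TEXT, views INTEGER, clicks INTEGER, spend REAL);
    INSERT INTO ad_events VALUES
        ('facebook', 1000,  50, 20.0),
        ('facebook', 3000, 100, 40.0),
        ('linkedin',  500,  10, 25.0);
""")

# One row per platform with totals and two derived metrics.
REPORT_SQL = """
    SELECT platform,
           SUM(views)  AS views,
           SUM(clicks) AS clicks,
           SUM(spend)  AS spend,
           ROUND(100.0 * SUM(clicks) / SUM(views), 2) AS ctr_percent,
           ROUND(SUM(spend) / SUM(clicks), 2)         AS cost_per_click
    FROM ad_events
    GROUP BY platform
    ORDER BY platform
"""

ad_report = conn.execute(REPORT_SQL).fetchall()
for row in ad_report:
    print(row)
```

The query result can be fed directly to a dashboard, so no processing engine other than the database is involved.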

Create ETL Pipelines Using Only SQL: Is Spark Really Needed for ETL?

That depends on the project’s architecture. Can you build ETL pipelines using only SQL? Yes, with cloud services like Azure or AWS.

SQL helps to avoid additional data processing costs

This works for projects where most of the load falls on managed services such as Amazon Redshift and Redshift Spectrum. In this case, the pipeline only has to send SQL queries to those services. Such an architecture doesn’t require extra flexibility for processing enormous amounts of data. In other cases, you need Spark or one of its alternatives.

How Can We Help?

If you are looking for a reliable digital partner, Visual Flow is ready to help you. The agency specializes in big data projects and is capable of meeting all quality expectations. With international awards and numerous client testimonials, the team is the best choice for solving any technical problems. The team also provides smooth communication with highly skilled data science professionals.

Founded in 2020 by top talents in the engineering market, Visual Flow will meet your business needs and provide any additional services needed. The company’s founders are experienced in IBM CDP and IBM Watson, while the digital agency provides exceptional services for customers worldwide.

The main ways of cooperation are:

- BI services and consulting

- ETL migration consulting services

- Data engineering and consulting

- Data Science

Check Visual Flow blog posts to see the proven expertise.

Final thoughts

Some ETL projects can be built on low-cost SQL solutions without Spark. Do you want to discuss your project details? Let’s get in touch. Visual Flow provides the help of top engineering professionals to achieve the best results for your business.

FAQ

Apache Spark is a mainstream tool that provides a convenient framework for the ETL process. But that isn’t the only one. You can use SQL commands to extract, transform, and load data from a data source to a database. There are other open-source, custom, and enterprise SQL tools as well.

Yes, using SQL for ETL pipelines is possible. Most of the mainstream ETL tools use SQL for data processing. But it depends on the project’s architecture and data load.

If you are interested in tips for creating an ETL process, check the article on SQL for ETL pipelines. You’ll learn how to create reference data, extract, validate, transform, and publish. It’s hard to imagine creating ETL pipelines without SQL.

Spark is a powerful engine that allows data engineers to create ETL pipelines and process large amounts of data in a short time. It is a user-friendly, versatile, and secure tool for data warehousing, business intelligence, and advanced analytical projects.
